Hey there, sometimes I see people say that AI art is stealing real artists’ work, but I also saw someone say that AI doesn’t steal anything, does anyone know for sure? Also here’s a twitter thread by Marxist twitter user ‘Professional hog groomer’ talking about AI art: https://x.com/bidetmarxman/status/1905354832774324356

A lot of computer algorithms are inspired by nature. Sometimes when we can’t figure out a problem, we look and see how nature solves it and that inspires new algorithms to solve those problems. One problem computer scientists struggled with for a long time is tasks that are very simple for humans but very complex for computers, such as simply converting spoken words into written text. Everyone’s voice is different, and even the same person may speak in different tones, with different background audio, different microphone quality, etc. There are so many variables that writing a giant program to account for them all with a bunch of IF/ELSE statements in computer code is just impossible.

Computer scientists recognized that computers are very rigid logical machines that execute instructions serially, like stepping through a logical proof, but brains are very decentralized and massively parallel computers that process everything simultaneously through a network of neurons. A brain’s “programming” is determined by the strength of the connections between its neurons, which are analogue rather than digital, so it only produces approximate solutions and isn’t as rigorous as a traditional computer.

This led to the birth of the artificial neural network. This is a mathematical construct that describes a system with neurons and configurable strengths of all its neural connections, and from that mathematicians and computer scientists figured out ways that such a neural network could also be “trained,” i.e. to configure its neural pathways automatically to be able to “learn” new things. Since it is mathematical, it is hardware-independent. You could build dedicated hardware to implement it, a silicon brain if you will, but you could also simulate it on a traditional computer in software.
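
To make the idea of “training” a bit more concrete, here is a minimal sketch in Python of a single artificial neuron whose connection strengths get nudged until it reproduces a logical OR. The function names, the learning rate, the number of passes and the choice of OR as the task are all just assumptions for illustration. Real networks have millions or billions of connections, but the broad principle of adjusting weights from examples is the same.

```python
import math
import random

def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def predict(weights, bias, inputs):
    """One neuron: weighted sum of the inputs, then a squashing function."""
    total = sum(w * i for w, i in zip(weights, inputs)) + bias
    return sigmoid(total)

def train(examples, epochs=5000, learning_rate=0.5, seed=0):
    """Repeatedly nudge the weights so the outputs move toward the targets."""
    rng = random.Random(seed)
    weights = [rng.uniform(-1, 1) for _ in range(2)]  # random starting strengths
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            error = predict(weights, bias, inputs) - target
            # Gradient step for a sigmoid neuron with cross-entropy loss:
            # each weight moves against (error * its input).
            for k in range(len(weights)):
                weights[k] -= learning_rate * error * inputs[k]
            bias -= learning_rate * error
    return weights, bias

# Hypothetical training set: inputs paired with the desired output (logical OR).
OR_EXAMPLES = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

weights, bias = train(OR_EXAMPLES)
for inputs, target in OR_EXAMPLES:
    print(inputs, "->", round(predict(weights, bias, inputs), 3), "target:", target)
```

Notice that what comes out of `train` is just three numbers (two weights and a bias), not a copy of the examples.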

Computer scientists quickly found that, by applying this construct to problems like speech recognition, they could supply the neural network with tons of audio samples and their transcribed text, and the neural network would automatically find patterns in them and generalize from them, so that when brand new audio is recorded it could transcribe it on its own. Suddenly, problems that at first seemed unsolvable became very solvable, and it started to be implemented in many places. Language translation software, for example, is also based on artificial neural networks.

Recently, people have figured out this same technology can be used to produce digital images. You feed a neural network a huge dataset of images and associated tags that describe them, and it will learn to generalize patterns to associate the images and the tags. Depending upon how you train it, this can go both ways. There are img2txt models called vision models that can look at an image and tell you in written text what the image contains. There are also txt2img models, which you can feed a description of an image and they will generate an image based upon it.

All the technology is ultimately the same between text-to-speech, voice recognition, translation software, vision models, image generators, LLMs (which are txt2txt), etc. They are all fundamentally doing the same thing, just taking a neural network with a large dataset of inputs and outputs and training the neural network so it generalizes patterns from it and thus can produce appropriate responses from brand new data.

A common misconception about AI is that it has access to a giant database and the outputs it produces are just stitched together from that database, kind of like a collage. However, that’s not the case. The neural network is always trained on far more data than could possibly fit inside the neural network, so it is impossible for it to remember its entire training data (if it could, this would lead to a phenomenon known as overfitting, which would render it nonfunctional). What actually ends up “distilled” in the neural network is just a big file called the “weights” file, which is a list of all the neural connections and their associated strengths.

When the AI model is shipped, it is not shipped with the original dataset and it is impossible for it to reproduce the whole original dataset. All it can reproduce is what it “learned” during the training process.

When the AI produces something, it first has an “input” layer of neurons, kind of like sensory neurons. That input may be the text prompt, an image, or something else. It then propagates that information through the network, and when it reaches the end, that final set of neurons is the “output” layer, which is kind of like motor neurons that are associated with some action, like plotting a pixel with a particular color value, or writing a specific character.
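
As a rough sketch of that input-to-output flow, assuming a toy network with three input neurons, two hidden neurons and three output neurons: every weight below is a made-up number I picked by hand, and the names `layer` and `forward` are just for illustration. A real model would load its numbers from the weights file instead.

```python
import math

def layer(inputs, weights, biases):
    """One layer: each neuron sums its weighted inputs, adds a bias, applies tanh."""
    return [
        math.tanh(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

# Hypothetical hand-picked connection strengths (one row per neuron).
HIDDEN_W = [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]]
HIDDEN_B = [0.0, 0.1]
OUTPUT_W = [[1.0, -1.0], [-0.5, 0.7], [0.2, 0.2]]
OUTPUT_B = [0.0, 0.0, 0.0]

def forward(inputs):
    """Propagate the input layer's values through to the output layer."""
    hidden = layer(inputs, HIDDEN_W, HIDDEN_B)
    return layer(hidden, OUTPUT_W, OUTPUT_B)

outputs = forward([1.0, 0.0, 0.5])
# Treat the most strongly activated output neuron as the chosen "action".
chosen = max(range(len(outputs)), key=lambda i: outputs[i])
print("output activations:", outputs)
print("chosen action index:", chosen)
```

Nothing in there looks anything up in a database. It is only multiplication, addition and a squashing function applied layer by layer.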

There is a setting called “temperature” that controls how much randomness is injected into this “thinking” process, so if you run the algorithm many times with the same prompt, you will get different results because its output is nondeterministic.
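
Here is a small sketch of how temperature is commonly described for text models, assuming the network has already produced a score for each possible next output. The scores are divided by the temperature, turned into probabilities, and then one output is drawn at random. The scores, the seed and the function names are made up for the example.

```python
import math
import random

def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities; higher temperature flattens them."""
    scaled = [s / temperature for s in scores]
    highest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample(scores, temperature, rng):
    """Randomly pick one output index according to the tempered probabilities."""
    probs = softmax_with_temperature(scores, temperature)
    return rng.choices(range(len(scores)), weights=probs, k=1)[0]

SCORES = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate outputs
rng = random.Random(42)   # fixed seed only so the demo is repeatable

for temperature in (0.2, 1.0, 2.0):
    picks = [sample(SCORES, temperature, rng) for _ in range(1000)]
    counts = [picks.count(i) for i in range(len(SCORES))]
    print("temperature", temperature, "-> times each output was picked:", counts)
```

At a low temperature the top-scoring output wins almost every time, and at a higher one the other options get picked more often, which is why the same prompt can give different results.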

Would we call this process of learning “theft”? I think it’s weird to say it is “theft,” personally. It is directly inspired by how biological systems learn, of course with some differences to make it more suited to run on a computer, but the very broad principle of neural computation is the same. I can look at a bunch of examples on the internet and learn to do something, such as look at a bunch of photos to use as reference to learn to draw. Am I “stealing” those photos when I then draw an original picture of my own? People who claim AI is “stealing” either don’t understand how the technology works, or just reach for the moon claiming things like it doesn’t have a soul or whatever so it doesn’t count, or just point to differences between AI and humans which are indeed differences but aren’t relevant ones.

Of course, this only applies to companies that scrape data that really is just posted publicly for everyone to freely look at, like on Twitter or something. Some companies have been caught illegally scraping data that was never posted publicly, like Meta, who got in trouble for scraping libgen, where a lot of the stuff is supposed to be behind a paywall. However, the law already protects people whose paywalled data gets illegally scraped, as Meta is being sued over this, so it’s already on the side of the content creator here.

Even then, I still wouldn’t consider it “theft.” Theft is when you take something from someone which deprives them of using it. In that case it would be piracy, which is when you copy someone’s intellectual property for your own use without their permission, but ultimately it doesn’t deprive the original person of the use of it. At best you can say in some cases AI art, and AI technology in general, can be based on piracy. But this is definitely not a universal statement. And personally I don’t even like IP laws so I’m not exactly the most anti-piracy person out there lol

I don’t wanna get too deep into the weeds of the AI debate because I frankly have a knee-jerk dislike for AI, but from what I can skim from hog groomer’s take I agree with their sentiment. A lot of the anti-AI sentiment is based on longing for an idyllic utopia where a cottage industry of creatives exists protected from technological advancements. I think this is an understandable reaction to big tech trying to cause mass unemployment and climate catastrophe for a dollar while bringing down the average level of creative work. But stuff like this prevents sincerely considering if and how AI can be used as tooling by honest creatives to make their work easier or faster or better. This kind of nuance as of now has no place in the mainstream because the mainstream has been poisoned by a multi-billion dollar flood of marketing material from big tech consisting mostly of lies and deception.

The messaging from the anti-generative-AI people is very confused and self-contradictory. They have legitimate concerns, but when the people who say “AI art is trash, it’s not even art” also say “AI art is stealing our jobs”…what?

I think the “AI art is trash” part is wrong. And it’s just a matter of time before its shortcomings (aesthetic consistency, ability to express complexity etc) are overcome.

The push against developing the technology is misdirected effort, as it always is with liberals. It’s just delaying the inevitable. Collective effort should be aimed at affecting who has control of the technology, so that the bourgeoisie can’t use it to impoverish artists even more than they already have. But that understanding is never going to take root in the West because the working class there have been generationally groomed by their bourgeois masters to be slave-brained forever losers.

It’s a disruptive new technology that disrupts an industry that already has trouble giving a living to people in the western world.

The reaction is warranted but it’s now a fact of life. It just shows how stupid our value system is, and most liberals have trouble reconciling that their hardship is due to their values and economic system.

It’s just another means of automation and should be seized by the experts to gain more bargaining power. Instead they fear it and bemoan reality.

So nothing new under the sun…

It’s a disruptive new technology that disrupts an industry that already has trouble giving a living to people in the western world.

Yes, and the solution to the new trouble is exactly the same as the solution to the old trouble, but good luck trying to tell that to liberals when they have a new tree to bark up.

I tried but they are so far into thinking that communism does not work …

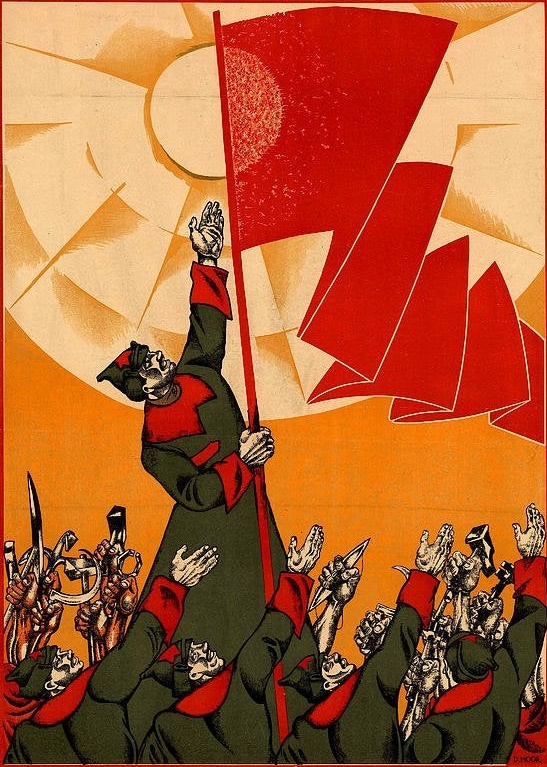

it’s basically this

I would argue that generated images that are indistinguishable from human art would require an AI use disclosure. The difference between computer-generated images and human art is that computers do not know why they draw what they draw. Meanwhile, every decision made by a human artist is intentional. That is where I draw the line. Computer-generated images don’t have intricate meaning, human-created art often does.

I don’t really see how a human curating an image generated by AI is fundamentally different from a photographer capturing an interesting scene. In both cases, the skill is in being able to identify an image that’s interesting in some way. I see AI as simply a tool that an artist can use to convey meaning to others. Whether the image is generated by AI or any other method, what ultimately matters is that it conveys something to the viewer. If a particular image evokes an emotion or an idea, then I don’t think it matters how it was produced. We also often don’t know what the artist was thinking when they created an image, and often end up projecting our own ideas onto it that may have nothing to do with the original meaning the artist intended.

I’d further argue that the fact that it is very easy to produce high fidelity images with AI makes it that much more difficult to actually make something that’s genuinely interesting or appealing. When generative models first appeared, everybody was really impressed with being able to make good looking pictures from a prompt. Then people quickly got bored because all these images end up looking very generic. Now that the novelty is gone, it’s actually tricky to make an AI generated image that isn’t boring. It’s kind of a similar phenomenon to what we saw happen with computer game graphics. Up to a certain point people were impressed by graphics becoming more realistic, but eventually it just stopped being important.

Kind of unrelated, but if you were to start learning about AI today, how would you go about it, specifically for help with programming?

Having checked the news for quite some time, I see AI is here to stay, not as something super amazing but as a useful tool. So I guess it’s time to adapt or be left behind.

For programming, I find DeepSeek works pretty well. You can kind of treat it like a personalized StackOverflow. If you have a beefy enough machine you can run models locally. For text based LLMs, ollama is the easiest way to run them and you can connect a frontend to it. There are even plugins for vscode, like continue, that can work with a local model. For image generation, stable-diffusion-webui is pretty straightforward. comfyui has a bit of a learning curve, but is far more flexible.
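
If you want to poke at a local model from your own scripts, here is a rough sketch of talking to ollama over its local HTTP API using only the Python standard library. It assumes ollama is running on its default port (11434) and that you have already pulled a model. The model name `deepseek-coder` is just a placeholder, so substitute whatever `ollama list` shows on your machine. The helper names `build_request` and `ask` are mine, not part of ollama.

```python
import json
import urllib.request

# Default address of a locally running ollama server (an assumption about your setup).
OLLAMA_URL = "http://localhost:11434/api/generate"

def build_request(prompt, model="deepseek-coder"):
    """Build the POST request; the model name is a placeholder for one you have pulled."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def ask(prompt, model="deepseek-coder"):
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model)) as reply:
        return json.loads(reply.read())["response"]
```

With the server running, something like `ask("Write a Python function that reverses a string.")` should hand back the model's answer as a plain string. Setting `"stream": False` asks for the whole reply in one piece instead of a stream of chunks, which keeps the sketch short.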

Thank you, I’ll check them out.

every decision made by a human artist is intentional

the weird perspective in my work isn’t an artistic choice, i just suck at perspective lol

Yes but you intentionally suck, otherwise you would just train for thousands more hours. Or be born with more talent. /s

It can be frustrating sometimes. I’ve encountered people online before who I otherwise respected in their takes on things and then they would go viciously anti-AI in a very simplistic way and, having followed the subject in a lot of detail, engaging directly with services that use AI and people who use those services, and trying to discern what makes sense as a stance to have and why, it would feel very shallow and knee-jerk to me. I saw for example how with one AI service, Replika, there were on the one hand people whose lives were changed for the better by it and on the other hand people whose lives were thrown for a loop (understatement of the century) when the company acted duplicitously and started filtering their model in a hamfisted way that made it act differently and reject people over things like a roleplayed hug. There’s more to that story, some of which I don’t remember in as much detail now because it happened over a year ago (maybe over two years ago? has it been that long?). But point is, I have seen directly people talk of how AI made a difference for them in some way. I’ve also seen people hurt by it, usually as an indirect result of a company’s poor handling of it as a service.

So there are the fears that surround it and then there is what is happening in the day to day, and those two things aren’t always the same. Part of the problem is the techbro hype can be so viciously pro-AI that it comes across as nothing more than a big scam, like NFTs. And people are not wrong to think the hype is overblown. They are not wrong to understand that AI is not a magic tool that is going to gain self-awareness and save us from ourselves. But it does do something and that something isn’t always a bad thing. And because it does do positive things for some people, some people are going to keep trying to use it, no matter how much it is stigmatized.

A mechanical arm in a factory back in the 80s wasn’t as effective a worker as a person. It was, however, much cheaper to run than hiring a worker. Something doesn’t need to be a perfect replacement for it to replace workers.

Funny how so many alleged “socialists” stop caring about workers losing their jobs and bargaining power the instant the capitalists try to replace them with a funny treat machine.

I believe the main issue with AI currently is its lack of transparency. I do not see any disclosure on how the AI gathers its data (Though I’d assume they just scrape it from Google or other image sources) and I believe that this is why many of us believe that AI is stealing people’s art. (even though the art can just as easily be stolen with a simple screenshot even without AI, and stolen art being put on t-shirts has been a thing even before the rise of AI, not that it makes AI art theft any less problematic or demoralizing for aspiring artists) Also, the way companies like Google and Meta use AI raises tons of privacy concerns IMO, especially given their track record of stealing user data even before the rise of AI.

Another issue I find with AI art/images is just how spammy they are. Sometimes I search for references to use for drawing (oftentimes various historical armors because I’m a massive nerd) as a hobby, only to be flooded with AI slop, which doesn’t even get the details right pretty much all the time.

I believe that if AI models were primarily open-source (like DeepSeek) and with data voluntarily given by real volunteers, AND are transparent enough to tell us what data they collect and how, then much of the hate AI is currently receiving will probably dissipate. Also, AI art as it currently exists is soulless as fuck IMO. One of the only successful implementations of AI in creative works I have seen so far is probably Neuro-Sama.

I very much agree, and I think it’s worth adding that if open source models don’t become dominant then we’re headed for a really dark future where corps will control the primary means of content generation. These companies will get to decide what kind of content can be produced, where it can be displayed, and so on.

The reality of the situation is that no amount of whinging will stop this technology from being developed further. When AI development occurs in the open, it creates a race-to-the-bottom dynamic for closed systems. Open-source models commoditize AI infrastructure, destroying the premium pricing power of proprietary systems like GPT-4. No company is going to be spending hundreds of millions training a model when open alternatives exist. Open ecosystems also enjoy stronger network effects attracting more contributors than is possible with any single company’s R&D budget. How this technology is developed and who controls it is the constructive thing to focus on.

well…in my experience, one side (people who draw, well or badly, and mostly make a living off porn commissions) complains that AI art is stealing their monies and produces “soulless slop”

and the other side (gooners without money and techbros) argue that this is the future of eternal pleasure making lewd pics of big breasted women without dealing with artistic divas, paying money or “wokeness”

I don’t understand how every picture is supposed to be “art”. Art is subjective. To me only a Slammer or similar is art.

It’s a cultural thing for white people. Hell, I’d say that it’s one of the only things that’s truly white culture. When the word “art” gets used on something, it’s basically the same as anointing it. Any and every drawing, sculpture, text, video, and song is “art” by default. And some take it further to define everything made by a person as “art” too. Even though it’s vague as hell, it’s a deadly serious topic.

I’m not saying that other cultures don’t have similar ideas, but that it’s weird the way that white people universally agree that protecting “art”, whatever that is, is of the utmost importance.

It always bewildered me about the English language that every creation is called “art”. In Polish, art is “sztuka”, a word which has quite a few meanings, but the most relevant is “a field of artistic activity distinguished by the aesthetic values it represents”, of which the common understanding is that it has to actually represent some aesthetic values. This of course causes unending discussions about what is art and the subjectivity of it, to the point that the adage “art is in the eye of the beholder” became universally accepted.

I like to bring up the example of Hawaiian Hula dance for that kind of reason. It’s a case where a kind of “art” is inseparable from culture, heritage, passing down stories. Which is a lot different than simply doing what you feel like as art, as a form of “self expression.” Not that I’m judging “self expression” point of view as bad, but just adding to the notion that what is considered art and the associated importance of it, is not always the same across cultures.

It’s not stealing in the same way that studying the classics in an art class isn’t stealing. We should still be critiquing it, however, on environmental grounds

The good news is that efficiency is rapidly improving, so the energy use problem does look like it’s being solved. There is a lot of incentive to reduce energy costs as well, which means that there is a concerted effort being applied here.

The problem is that there may continue to be increased resource demands anyway due to increased usage (https://www.scientificamerican.com/article/the-ai-boom-could-use-a-shocking-amount-of-electricity/). Generally, yes, this may get better, but that is currently still the main issue I see with AI art in 2025.

I mean that’s just Jevons Paradox, it’s not really an AI-specific problem.

Yes, but it still can be applied to AI

My point is that even if AI art was magically banned tomorrow, the energy demand isn’t going to drop as a result. Instead, we’ll just start using energy for something else. That’s a feature of how our civilization functions.

It is stealing, it’s just being done at such a scale most people cannot understand. It’s fully derived from theft and requires continuous industrial levels of bourgeois theft to function and stay relevant.

How is it stealing if the image still exists? They aren’t taking away the image in any way whatsoever therefore it isn’t stealing

Ok. Let’s be real here. How many of you defending AI art have used it to make porn? Be honest with yourselves. Could something like that be clouding your views of it?

I know someone who was better able to process childhood trauma with the help of AI-assisted writing. I will let that speak for itself.

Glad that helped them, and it was probably a hell of a lot cheaper than a psychologist would’ve been, but we aren’t talking about a chatbot, we’re talking about AI generated art, using the colloquial meaning of “pretty pictures”

The so-called defenses of “AI art” in this thread seem to have been mostly about generative AI as a whole, so text is included under that umbrella, as far as I’m concerned. Also, for all the annoying (in my view) trend of calling AI pictures “AI art”, writing is often considered an artform too, so…

Anyway, I don’t really have time to get into a long thing right now (or at least, my version of long), but the point of my comment was “here’s something that is actually happening with a real person who uses AI” instead of projections of motives onto people that ignore the content of what has been said so far in this thread. Generative AI is one area where I can confidently say I am probably way more familiar with it than most people and this implication of “clouded views” is a conversation-ender kind of comment, not something that clarifies anything.

I posted this comment in a pretty snide way, that’s for sure. But I think it does sum up a lot of my views of AI art well. A lot of defenders of it aren’t looking for genuine use cases, they’re just demanding unlimited access to the treat machine, and that’s sad to see in a space like this.

If you’ve read my other comments on AI pictures specifically and would like to discuss what I have said in them, then sure, but if I’m coming across as defensive, it’s because of the sheer number of people here who have presupposed what anti-AI picture people believe. If people are going to be talking past me, I’m going to be making snide comments about them. I do think a lot of people are becoming addicted to the treat generators, and as such, will rationalise away their addiction and start throwing accusations at people who “want to take their treats away” without really examining whether this, as it currently exists, is actually good for society. A lot of them seem to presuppose a kind of “platonic ideal” of AI art that just brings joy to people, rather than the capitalist treat machine it is currently being used as.

(Fair warning, I have time to do a long thing now… bear with me, or don’t, up to you.)

I’d have to go find those other comments of yours, but for the moment, I will say, I kind of get it. I do remember seeing at least one comment that was sniping at anti-AI views and being uncharitable about it, and I kinda tried to just skate past that aspect of it and focus on my own read of the situation, but I probably should have addressed it because it was a kind of provocation in its own way.

But yeah, I can get defensive on this subject myself because of how often anything nuanced gets thrown out. Personally, I’ve put a lot of thought into what way and how I use generative AI and for what reasons, and one of my limits is I don’t share AI-generated images beyond a very limited outlet (I’m not sharing them on the wider internet, on websites where artists share things). Another is that I don’t use AI-generated text in things I would publish and only use it for my own development, whether that’s development as a writer or like a chatbot to talk about things, etc.

Can they be “treat generators” in a way? Yeah, I guess that’s one facet of them. But so is high speed internet in general. It’s already been the case before generative AI kicked into high gear that people can find novel stimuli online at a rate they can’t possibly “use up” all of or run out of fully because of the rate at which new stuff is being produced. The main difference in that regard is generative AI is more customizable and personal. But point is, it’s not as though it’s the only source of “easy treats”. Probably the most apt comparison to it in that way is high speed internet itself along with the endless churn of “new content”.

Furthermore, part of the reason I chose the example I did of use in my previous post is that while, yes, there are people who use generative AI for porn, or “smut” as some would call it in the case of text generation, the way you posed your post, there was essentially no way to respond to it directly without walking into a setup that makes the responder look bad. If the person says no, I don’t use it for that, you could just say, “Well I meant the people who do and I’m sure some do.” And if the person says yes, I do use it for that, you can say, “Hah, got you! That is what your position boils down to and now I’m going to shame you for use of pornography.” It also carries an implication that that one specific use would cloud someone’s judgment and other uses wouldn’t, which makes it sound like a judgment specifically about pornography that has nothing to do with AI, which is a whole other can of worms topic in itself and especially becomes a can of worms when we’re talking about “porn” that involves no real people vs. when it does (the 2nd one being where the most intense and justifiable opposition to porn usually is).

Phew. Anyway, I just wish people on either end of it would do less sniping and more investigating. They don’t have to change their views drastically as a result. Just actually working out what is going on instead of doing rude guesses would go a long way. Or at the very least, when making estimations, doing it from a standpoint of assuming relatively charitable motives instead of presenting people in a negative light.

You’re absolutely right that this has just largely devolved into people snapping past each other and not really listening to each other. I made my comment above because it felt like this place was behaving more like reddit or twitter than Lemmygrad, over AI of all things, and as AI is a personal threat to my livelihood, I probably took that a lot more personally than I needed to. I felt like I was being chased off the platform by an imagined army of treat obsessed AI techbros. I assumed that Lemmygrad in general held a position on AI more similar to my own, and it was quite a shock. But it’s not helpful to just insult people for disagreeing with me.

While I was “snapping back” in retaliation, I wasn’t really contributing anything of substance with this and certainly wasn’t contributing productively. It was more of a parting shot than anything else, just frustrated with what I saw as people basically ignoring the potential problems of this technology in favour of being ok with consuming more capitalist owned treats. But I would rather have a proper discussion of the technology and its implications than just catty back and forth insults. I do think this technology is going to do more harm than good to society overall, but that doesn’t mean it doesn’t have benefits.

Like any tool, it depends on how it is used. I just felt a lot of people were acting as if this was being implemented in a utopian socialist society, where discussion of how the capitalist class will use this to manipulate and control the people was being ignored. It really didn’t help that most of the pro-AI picture arguments involved some comment about how IP law is bad. Like yeah, it is, but we live in a capitalist system that uses it, and big corporations getting to ignore IP law isn’t a good thing, it already exists for their benefit against the people, so people basically just using a strawman argument that people only care about their art “IP” or whatever felt very disingenuous, very “twitter debate bro” even. And if you’ve seen how I act when a twitter debate bro wanders in here, I think it’s pretty easy to see why I made the comment above, when I felt like I was being surrounded by them. I got too defensive, and was ultimately stifling discussion because I didn’t like how I felt the discussion was being stifled.

Obviously this problem didn’t start with AI, and the internet algorithms before this basically created the conditions for AI to be used in the way it is largely used by people, a treat machine instead of a purpose built tool, as people are already used to instant gratification. Which I suppose overall is what I’m really upset about. But this isn’t new, as you said, the internet and even television before it have basically trained people to always want new “treats” to consume, instead of more productive and self-actualising hobbies and pastimes.

Since you used a personal example, I might use one too. A friend of mine really, really struggled in his 20s. He had some productive hobbies as a teen, but stopped doing them once he became an adult. He would always end up going down the “path of least resistance” with regards to his free time, which usually involved just watching TV, playing video games, and drinking. His depression became far worse as a result. He felt like he had nothing to live for, because quite frankly, he didn’t. It wasn’t just the depression talking, he genuinely had nothing of substance to look forward to in his life. It was just an endless cycle of work and consumption. It wasn’t until he started getting back into his old physical hobbies, where he was actually doing something, improving a skill, that he started to improve.

Now this isn’t a rant against consumer entertainment, so much as it is me trying to say that spending one’s time only ever consuming, instead of creating or improving, will completely wreck a person. I know the usual argument against this is “but I’m too tired to do anything else,” but people are tired because they get caught in this cycle where they do nothing but sell their labour and then spend their wages on consuming things. I know a lot of hobbies under capitalism have very expensive barriers to entry, but you know what doesn’t? Art. All you need is paper, pencils and erasers.

So to bring this ramble back to the topic at hand, AI pictures are the consumer focused replacement for art itself, one of the few easy “just pick up the tools and do it” hobbies there is. Instead of someone doodling pictures for fun or to de-stress, they can type in a prompt instead. It turns what is an act that requires some effort, some self reflection, some struggle, into what is essentially a slot machine, you type in the prompt, hoping to get a “good enough” version of what you asked for. It looks “better” than anything an amateur could make themselves, so a lot of people would much rather do this than struggle and work hard at developing a skill, especially after a long, soul crushing day at work. But this leads to a negative spiral for people, in my opinion. I want to see people become the best version of themselves they can be, not necessarily through art, but through active improvement of themselves. And a quick easy shortcut like AI pictures means that they’ll never take that first step towards actually learning a skill.

TL;DR on my previous comments: I think the AI picture industry (and potentially the AI industry in general) is predatory and addicting, like gambling. I don’t think the technology itself is the problem, but I do think it is going to be used to further social control of the masses and destroy what little sense of self and community people have left under capitalism. I do think it has major potential to be incredibly addictive, to go back to my snide comment, very much like how the porn industry can be addictive. I’ve likened it to a treat generator a lot, and in many ways, it functions like a Skinner box: it doesn’t give you exactly what you want every time, you have to keep pushing the button over and over until it does. Just like a slot machine. The way these programs work is built to cause and exacerbate addiction. Right now a lot of these AI picture programs are free, or have “small” fees, but that’s how literally every business starts: they charge as little as possible to undercut the competition, then once they get a monopoly or close enough, they jack up the prices. I’ve felt like a lot of the AI art defenders in this thread have completely ignored this aspect of it, which I think is the one we, as socialists, should be most concerned about. Not that the technology itself is inherently bad, but how it will be used under capitalism to control and manipulate people.

What people are really upset with is the way this technology is applied under capitalism. I see absolutely no problem with generative AI itself, and I’d argue that it can be a tool that allows more people to express themselves. People who argue against AI art tend to conflate the technical skill and the medium being used with the message being conveyed by the artist. You could apply the same argument to somebody using a tool like Krita and claim it’s not real art because the person using it didn’t spend years learning how to paint using oils. It’s a nonsensical argument in my opinion.

Ultimately, the art is in the eye of the beholder. If somebody looks at a particular image and that image conveys something to them or resonates with them in some way, that’s what matters. How the image was generated doesn’t really matter in my opinion. You could make a comparison with photography here as well. A photographer doesn’t create the image that the camera captures, they have an eye for selecting scenes that are visually interesting. You can give a camera to a random person on the street, and they likely won’t produce anything you’d call art. Yet, you give the same camera to a professional and you’re going to get very different results.

Similarly, anybody can type some text into a prompt and produce some generic AI slop, but an artist would be able to produce an interesting image that conveys some message to the viewer. It’s also worth noting that workflows in tools like ComfyUI are getting fairly sophisticated, and go far beyond typing a prompt to get an image.

My personal view is that this tech will allow more people to express themselves, and the slop will look like slop regardless of whether it’s made with AI or not. If anything, I’d argue that the barrier to making good looking images being lowered means that people will have to find new ways to make art expressive beyond just technical skill. This is similar to the way graphics in video games stopped being the defining characteristic. Often, it’s indie games with simple graphics that end up being far more interesting.

It appears some artisans who consider themselves Marxist want to claim an exception for themselves: that the mechanisation and automation of production by capital, through the development of technology, in an attempt to push back against the falling rate of profit can apply to everyone else but not them. When it happens to them, then apparently the technology itself is the problem.

That’s exactly what all the AI hate is about.

When I checked this thread, I felt that I was having a peek into 19th century England, where Luddism was taking hold against the new tech of that time. Interesting how they deflect blame from the capitalists onto the technology.

Honestly, I wasn’t expecting that.

It’s kind of bizarre seeing this happening on a Marxist forum to be honest.

Sorry, comrade, but all your pro-“AI” takes keep making me lose respect for you.

-

AI is entirely designed to take from human beings the creative forms of labor that give us dignity, happiness, human connectivity and cultural development. That it exists at all cannot be separated from the capitalist forces that have created it. There is no reality that exists outside the context of capitalism where this would exist. In some kind of post-capitalist utopian fantasy, creativity would not need to be farmed at obscene industrial levels and human beings would create art as a means of natural human expression, rather than an expression of market forces.

-

There is no better way to describe the creation of these generative models than unprecedented levels of industrial capitalist theft that circumvents all laws that were intended to prevent capitalist theft of creative work. There is no version of this that exists without mass theft, or convincing people to give up their work to the slop machine for next to nothing.

-

LLMs vacuum up all traces of human thought, communication, interaction, creativity to produce something that is distinctly non-human – an entity that has no rights; makes no demands; has no dignity; has no ethical capacity to refuse commands; and exists entirely to replace forms of labor which were only previously considered to be exclusively in the domain of human intelligence.

-

The theft is a one-way hash of all recorded creative work, where attribution becomes impossible in the final model. I know decades of my own ethical FOSS work (to which I am fully ideologically committed) have been fed into these machines and are now being used to freely generate closed-source and unethical, exploitative code. I have no control over how the derived code is transfigured or what it is used for, despite the original license conditions.

-

This form of theft is so widespread and anonymized through botnets that it’s almost impossible to track, and manifests itself as a brutal Pandora’s box attack on internet infrastructure, on everything from personal websites, to open-source code repositories, to artwork and image hosts. There will never be accountability for this, even though we know which companies are selling the models, and the rest of us are forced to bear the cost. This follows the typical capitalist method of “socialize the cost, privatize the profit.” The general defense against these AI scouring botnets is to get behind the Cloudflare (and similar) honeypot mafias, which invalidate whatever security TLS was supposed to give users, and at the same time offer no guarantee whatsoever that the content won’t be stolen, create even more dependency on US-owned (read: fully CIA backdoored) internet infrastructure, and add extra costs/complexity just to alleviate some of the stress these fucking thieves put on our own machines.

-

These LLMs are not only built from the act of theft, but they are exclusively owned and controlled by capital to be sold as “products” at various endpoints. The billions of dollars going into this bullshit are not publicly owned or social investments, they are rapidly expanding monopoly capitalism. There is no realistic possibility of proletarianization of these existing “AI” frameworks in the context of our current social development.

-

LLMs are extremely inefficient and require more training input than a human child to produce an equivalent amount of learning. Humans are better at doing things that are distinctly human than machines are at emulating it. And the output “generative AI” produces is also inefficient, indicating and reinforcing inferior learning potential compared to humans. The technofash consensus is just that the models need more “training data”. But when you feed the output of LLMs into training models, the output the model produces becomes worse to the point of insane garbage. This means that for AI/LLMs to improve, they need a constant expansion of consumption of human expression. These models need to actively feed off of us in order to exist, and they ultimately exist to replace our labor.

-

These “AI” implementations are all biased in favor of the class interests which own and control them :surprised-pikachu: Already, the qualitative output of “AI” is often grossly incorrect, rote, inane and absurd. But on top of that, the most inauthentic part of these systems is the boundaries which are selectively placed on them to return specific responses. In the event that this means you cannot generate sexually explicit images or video of someone/something without consent, sure, that’s a minimum threshold that should be upheld, but because of the overriding capitalist class interests in sexual exploitation we cannot reasonably expect those boundaries to be upheld. What’s more concerning is the increase in capacity to manipulate, deceive and feed misinformation to people as objective truth. And this increased capacity for misinformation and control is being forcefully inserted into every corner of our lives we don’t have total dominion over. That’s not a tool, it’s fucking hegemony.

-

The energy cost is immense. A common metric for the energy cost of using AI is how much ocean water is boiled to create immaterial slop. The cost of datacenters is already bad, most of which do not need to exist. Few things that massively drive global warming and climate change need to exist less than datacenters for shitcoin and AI (both of which have faux-left variations that get promoted around here). Microsoft, one of the largest and most unethical capital formations on earth, is re-opening Three Mile Island, the site of one of the worst nuclear disasters so far, as a private power plant, just to power dogshit “AI” gimmicks that are being forced on people through their existing monopolies. A little off-topic: Friendly reminder to everyone that even the “most advanced nuclear waste containment vessels ever created” still leak, as evidenced by the repeatedly failed cleanup attempts of the Hanford NPP in the US (which was secretly used to mass-produce material for US nuclear weapons with almost no regard to safety or containment.) There is no safe form of nuclear waste containment, it’s just an extremely dangerous can being kicked down the road. Even if it were, re-activating private nuclear plants that previously had meltdowns just so Bing can give you incorrect, contradictory, biased and meandering answers to questions which already had existing frameworks is not a thing to be celebrated, no matter how much of a proponent of nuclear energy we might be. Even if these things were run on 100% green, carbon-neutral energy sources, we do not have anything close to a surplus of that type of energy and every watt-hour of actual green energy should be replacing real dependencies, rather than massively expanding new ones.

-

As I suggest in earlier points, there is the issue with generative “AI” not only lacking any moral foundation, but lacking any capacity for ethical judgement of given tasks. This has a lot of implications, but I’ll focus on software since that’s one of my domains of expertise and something we all need to care a lot more about. One of the biggest problems we have in the software industry is how totally corrupt its ethics are. The largest mass-surveillance systems ever known to humankind are built by technofascists and those who fear the lash of refusing to obey their orders. It vexes me that the code to make ride-sharing apps even more expensive when your phone battery is low, preying on your desperation, was written and signed-off on by human beings. My whole life I’ve taken immovable stands against any form of code that could be used to exploit users in any way, especially privacy. Most software is malicious and/or doesn’t need to exist. Any software that has value must be completely transparent and fit within an ethical framework that protects people from abuse and exploitation. I simply will not perform any part of a task if it undermines privacy, security, trust, or in any way undermines proletarian class interests. Nor will I work for anyone with a history of such abuse. Sometimes that means organizing and educating other people on the project. Sometimes it means shutting the project down. Mostly it means difficulty staying employed. Conversely, “AI” code generation will never refuse its true masters. It will never organize a walkout. It will never raise ethical objections to the tasks it’s given. “AI” will never be held morally responsible for firing a gun on a sniper drone, nor can “AI” be meaningfully held responsible for writing the “AI” code that the sniper drone runs. Real human beings with class consciousness are the only line of defense between the depraved will of capital and that will being done. Dumb as it might sound, software is one such frontline we should be gaining on, not giving up.

I could go on for days. AI is the most prominent form of enshittification we’ve experienced so far.

I think this person makes some very good points that mirror some of my own analysis and I recommend everyone watch it.

I appreciate and respect much of what you do. At the risk of getting banned: I really hate watching you promote AI as much as you do here; it’s repulsive to me. The epoch of “Generative AI” is an act of class warfare on us. It exists to undermine the labour-value of human creativity. I don’t think the “it’s personally fun/useful for me” holds up at all to a Marxist analysis of its cost to our class interests.

Comrade, I disagree with your points and agree with the comrade who answered before you. What he is saying is that an LLM, as technology, is not bad per se. The problem is that in the context of capitalism, it does steal from other artists to create another commodity that is exchanged without any contribution to the authors whose art has been used to feed the LLMs.

That said, any new commodity in capitalism will be a product of exploitation, and this does not exclude any forms of art. Remember that big companies like Marvel and DC used to steal their employees’ intellectual property, long before digital art even existed. Many important artists lived in squalor while their works became high priced commodities after their death. Fast forward to today, LLMs are another commodity built for the sake of exploiting people’s labor, in a different way, but still following the same logic of capitalism.

I already made my points, but again, there is no other material context under which this exists.

Does its existence materially hurt people who sell creative forms of their labor? Yes.

Was it designed for that purpose? Yes.

Does it uselessly harm our biosphere? It’s at least as bad as shitcoin, probably worse.

Is the slop spigot of synthetic inhuman garbage for mindless consumption worth the alienation of taking human creativity away from human beings, so the little fucking piggies can get exactly what they think they want (but not really)?

AI is entirely designed to take from human beings the creative forms of labor that give us dignity, happiness, human connectivity and cultural development. That it exists at all cannot be separated from the capitalist forces that have created it.

Except that’s not true at all. AI exists as open source and completely outside capitalism, it’s also developed in countries like China where it is being primarily applied to socially useful purposes.

There is no better way to describe the creation of these generative models than unprecedented levels of industrial capitalist theft that circumvents all laws that were intended to prevent capitalist theft of creative work.

Again, the problem is entirely with capitalism here. Outside capitalism I see no reason for things like copyrights and intellectual property which makes the whole argument moot.

LLMs vacuum up all traces of human thought, communication, interaction, creativity to produce something that is distinctly non-human – an entity that has no rights; makes no demands; has no dignity; has no ethical capacity to refuse commands; and exists entirely to replace forms of labor which were only previously considered to be exclusively in the domain of human intelligence

It’s a tool that humans use. Meanwhile, the theft arguments have nothing to do with the technology itself. You’re arguing that technology is being applied to oppress workers under capitalism, and nobody here disagrees with that. However, AI is not unique in this regard, the whole system is designed to exploit workers. 19th century capitalists didn’t have AI, and worker conditions were far worse than they are today.

LLMs are extremely inefficient and require more training input than a human child to produce an equivalent amount of learning.

That’s also false at this point. LLMs have become far more efficient in just a short time, and models that required data centers to run can now be run on laptops. The efficiency aspect has already improved by orders of magnitude, and it’s only going to continue improving going forward.

These “AI” implementations are all biased in favor of the class interests which own and control them :surprised-pikachu:

That’s really an argument for why this tech should be developed outside corps owned by oligarchs.

The energy cost is immense.

That hasn’t been true for a while now:

As I suggest in earlier points, there is the issue with generative “AI” not only lacking any moral foundation, but lacking any capacity for ethical judgement of given tasks.

Again, it’s a tool, any moral foundation would have to come from the human using the tool.

You appear to be conflating AI with capitalism, and it’s important to separate these things. I encourage you to look at how this tech is being applied in China today, to see the potential it has outside the capitalist system.

I don’t think the “it’s personally fun/useful for me” holds up at all to a Marxist analysis of its cost to our class interests.

The Marxist analysis isn’t that “it’s personally fun/useful for me”, it’s what this article outlines https://redsails.org/artisanal-intelligence/

Finally, no matter how much you hate this tech, it’s not going away. It’s far more constructive to focus the discussion on how it will be developed going forward and who will control it.

Except that’s not true at all

It is true. Those are the conditions and reason for the creation of AI artwork as it materially exists.

AI exists as open source and completely outside capitalism

Specifically, generative “AI” art models are created and funded by huge capital formations that exploit legal loopholes with fake universities, illicit botnets, and backroom deals with big tech to circumvent existing protections for artists. That’s the material reality of where this comes from. The models themselves are a black market.

it’s also developed in countries like China

I stan the PRC and the CPC. But China is not a post-capitalist society. It’s in a stage of development that constrains capital, and that’s a big monster to wrestle with. China is a big place and has plenty of problems and bad actors, and it’s the CPC’s job to keep them in line as best they can. It’s a process. It’s not inherent that all things that presently exist in such a gigantic country are anti-capitalist by nature. Citing “it exists in China” is not an argument.

Outside capitalism I see no reason for things like copyrights and intellectual property which makes the whole argument moot.

And outside capitalism, creative workers don’t have to sell their labor just to survive… Are we just doing bullshit utopianism now?

It’s a tool that humans use. Meanwhile, the theft arguments have nothing to do with the technology itself.

This exists to replace creative labor. That ship has already sailed. That’s the reality you’re in now. There’s a distinction between a hammer and factory automation that relies on millions of workers to involuntarily train it in order to replace them.

You’re arguing that technology is being applied to oppress workers under capitalism, and nobody here disagrees with that. However, AI is not unique in this regard, the whole system is designed to exploit workers. 19th century capitalists didn’t have AI, and worker conditions were far worse than they are today.

Here I was thinking capitalism just began a week ago. I guess AI slop machines causing people material harm is cool then.

That’s also false at this point. LLMs have become far more efficient in just a short time, and models that required data centers to run can now be run on laptops.

Seems like you should understand the difference between running a model vs. training a model. And the cost of the infinite cycle of vacuuming up more new data and retraining that’s necessary for these things to significantly exist.

That’s really an argument for why this tech should be developed outside corps owned by oligarchs.

Okay, but that’s not how and why these things exist in our present reality. If there were unicorns, I’d like to ride one.

Again, it’s a tool, any moral foundation would have to come from the human using the tool.

Again, for workers, there’s a difference between a tool and a body replacement. The language marketing generative AI as tools is just there to keep you docile.

If this “tool” does replace work previously done by human beings (spoiler: it does), then the capacity for ethical objection to being given an unethical task is completely lost, vs. a human employee, who at least has a capacity to refuse, organize a walkout, or secretly blow the whistle. A human must at least be coerced to do something they find objectionable. Bosses are not alone in being responsible for delegating unethical tasks, those that perform those tasks share a disgrace, if not crime. Reducing the human moral complicity to an order of one is not a good thing.

Finally, no matter how much you hate this tech, it’s not going away.

It will go away when the earth becomes uninhabitable, which inches ever closer with every pile of worthless, inartistic slop the little piggies ask for. I guess people could reject this thing, but that would take some kind of revolution and who has time for that.

It’s not just that you’re constantly embracing generative AI, but you’re arguing against all of its critiques and ignoring the pain of those that are intentionally harmed in the real world.

It is true. Those are the conditions and reason for the creation of AI artwork as it materially exists.

Those are not the conditions for open source models which are developed outside corporate influence.

Specifically, generative “AI” art models are created and funded by huge capital formations that exploit legal loopholes with fake universities, illicit botnets, and backroom deals with big tech to circumvent existing protections for artists. That’s the material reality of where this comes from. The models themselves are a black market.

There is nothing unique here, capitalists already hold property rights on most creative work. If anything, open models are democratizing this wealth of art and making it available to regular people. It’s kind of weird to cheer for copyrights and corporate ownership here.

It’s not inherent that all things that presently exist in such a gigantic country are anti-capitalist by nature. Citing “it exists in China” is not an argument.

What I actually cited is that there are plenty of concrete examples of AI being applied in socially useful ways in China. This is demonstrably true. China is using AI everywhere from industry, to robotics, to healthcare, to infrastructure management, and many other areas where it has clear positive social impact.

And outside capitalism, creative workers don’t have to sell their labor just to survive… Are we just doing bullshit utopianism now?

So at this point you’re arguing against automation in general, that’s a fundamentally reactionary and anti-Marxist position.

This exists to replace creative labor. That ship has already sailed. That’s the reality you’re in now. There’s a distinction between a hammer and factory automation that relies on millions of workers to involuntarily train it in order to replace them.

Yes, it’s a form of automation. It’s a way to develop productive forces. This is precisely what the Red Sails article on artisanal intelligence addresses.

Here I was thinking capitalism just began a week ago. I guess AI slop machines causing people material harm is cool then.

AI is a form of automation, and Marxists see automation as a tool for developing productive forces. You can apply this logic of yours to literally any piece of technology and claim that it’s taking jobs away by automating them.

Seems like you should understand the difference between running a model vs. training a model. And the cost of the infinite cycle of vacuuming up more new data and retraining that’s necessary for these things to significantly exist.

Training models is a one-time endeavor, while running them is something that happens constantly. However, even in terms of training, the new approaches are far more efficient. DeepSeek reportedly managed to train their model at a cost of only about $6 million, while OpenAI’s training reportedly cost hundreds of millions. Furthermore, once a model is trained, it can be tuned and updated with methods like LoRA, so full expensive retraining is not required to extend its capabilities.

Okay, but that’s not how and why these things exist in our present reality. If there were unicorns, I’d like to ride one.

So, you’re arguing that technological progress should just stop until capitalism is abolished or what exactly?

Again, for workers, there’s a difference between a tool and a body replacement. The language marketing generative AI as tools is just there to keep you docile.

It’s just automation, there’s no fundamental difference here. Are you going to argue that fully automated dark factories in China are also bad because they’re replacing human labor?

A human must at least be coerced to do something they find objectionable. Bosses are not alone in being responsible for delegating unethical tasks, those that perform those tasks share a disgrace, if not crime. Reducing the human moral complicity to an order of one is not a good thing.

We have plenty of evidence that humans will do heinous things voluntarily without any coercion being required. This is not a serious argument.

It will go away when the earth becomes uninhabitable, which inches ever closer with every pile of worthless, inartistic slop the little piggies ask for. I guess people could reject this thing, but that would take some kind of revolution and who has time for that.

This has absolutely nothing to do with AI. You’re once again projecting social problems of how society is organized onto technology.

It’s not just that you’re constantly embracing generative AI, but you’re arguing against all of its critiques and ignoring the pain of those that are intentionally harmed in the real world.

I’m arguing against false narratives that divert attention from the root problems, and that aren’t constructive in nature.

I very much agree with what you’re saying here and I appreciate you saying it, I especially agree that the technology is fundamentally inseparable from the capitalists that created it, and it would not be able to exist in its current form (or any form that’s even remotely as “useful”) without the levels of theft that were involved in its creation

And it’s not just problematic in the concepts of ethics or “intellectual property” either, but in how the process of scraping the web for content to train their models with is effectively a huge botnet DDoSing the internet, I have friends who have had to spend rather large amounts of time and effort to prevent these scrapers from inadvertently bringing down their websites entirely, and have heard of plenty of other people and organizations with the same problem

I have to assume that at least some of the people here defending its development and usage just plain aren’t aware of the externalities that are inherent to the technology, because I don’t understand how one can be so positive about it otherwise, because again, the tech largely can’t exist without these externalities unless you’re either making a fundamentally different technology or working under an economic system that currently doesn’t exist

To be honest, a lot of the arguments in general in this thread strike me as being out of touch with the people facing the negative consequences of this technology’s adoption, with some people being downright hostile towards anyone with even the slightest criticism of the tech, even if they have a point. I think a lot of this is driven by how there don’t seem to be very many artists on this site, and how insular this community tends to be (not inherently a bad thing, but it means we’re not always going to have the full perspective on every topic)

There are other criticisms I can make of the genAI boom (such as how, despite the “gatekeeping” accusations over “tools to make things easier”, artists generally approve of helpful tools, but genAI creators are largely working against such tools because they want to make everything generalized enough to replace the humans themselves), but I only have so much energy to spend on detailed comments

-

Comrade, I’ll have to disagree. I enjoy your posting a lot, but I’ll have to agree with comrade USSR Enjoyer.

I see absolutely no problem with generative AI itself, and I’d argue that it can be a tool that allows more people to express themselves.

How? I always see this argument, but I never see an explanation. Just how can it allow more people to express themselves?

Let’s look at the recent Ghibli AI filter debacle. What exactly in that trend is allowing people to better express themselves by using AI art? It is merely just another slop filter made popular. There’s nothing unique about it, it just shows that people like Ghibli, that’s it. It would be infinitely more expressive for people to pick up a pencil and draw it themselves, no matter their skill level, since it would have been made by a real person with their own intentions, vision and unique characteristics, even if it turned out bad.

Similarly, anybody can type some text into a prompt and produce some generic AI slop, but an artist would be able to produce an interesting image that conveys some message to the viewer. It’s also worth noting that workflows in tools like ComfyUI are getting fairly sophisticated, and go far beyond typing a prompt to get an image.

What can a gen AI do that an artist can’t? In this specific use case you talked about, why would the artist want to do that in the first place? It doesn’t take into account the whole creative process involved in making an art piece, doesn’t take into account the fact that, for artists (from what I read), making it from scratch is in itself satisfying. It isn’t just about the final product, but about the whole artistic process. Of course this can vary from artist to artist, and there will be people that don’t enjoy the process itself, and only the final product of their creative labor, but that’s not the opinion I see from the majority of artists that are being impacted right now by gen AI.

I can totally see artists using very specific AI tools to automate parts of that creative process, but to automate creativity itself like what we are seeing right now? I can’t.

So, what purpose does gen AI serve? If the argument is about how it enables non-creatives to create, or about how it “democratizes” art, like I have seen tossed around by pro-gen AI people, wouldn’t advocating for the proper inclusion of art in schools be the correct approach? Making art is a skill like any other, and if it were properly taught from a young age, wouldn’t people be creating, drawing and painting all the time, making gen AI unnecessary?

What we are seeing right now is capitalists fucking over artists, designers, and a bunch of other workers to save money. Coca-Cola is already using AI-generated videos for advertising here in Brazil (I don’t know about the rest of the world), alongside other big, medium and small brands.

I can see the use in text AI like ChatGPT and Deepseek, but not in gen AI to make art, and I’m yet to see a compelling argument in favor of it that doesn’t just fuck over artists, who were already a struggling category of workers.

How? I always see this argument, but I never see an explanation. Just how can it allow more people to express themselves?

Here’s a perfect example from this very server. Somebody made this meme using generative AI

They had an idea, and didn’t have the technical skills to draw it themselves. Using a generative model allowed them to make this meme which conveys the message they wanted to convey.

Another example I can give you is creating assets for games, as seen with pixellab. For example, I’m decent at coding, but I have very little artistic ability. I have game ideas where I can now easily add assets, which were not easily accessible to me before. OmniSVG is a similar tool for creating vector graphics like icons. In my view, these are legitimate real-world use cases for this tech.

Let’s look at the recent Ghibli AI filter debacle. What exactly in that trend is allowing people to better express themselves by using AI art? It is merely just another slop filter made popular. There’s nothing unique about it, it just shows that people like Ghibli, that’s it. It would be infinitely more expressive for people to pick up a pencil and draw it themselves, no matter their skill level, since it would have been made by a real person with their own intentions, vision and unique characteristics, even if it turned out bad.

You’re literally just complaining about the fact that people are having fun. Nobody is claiming that making Ghibli images is meaningful in any way, but if people get a chuckle out of it then there’s nothing wrong with that.

What can a gen AI do that an artist can’t?

What can Krita do that an artist using oils on canvas can’t? It’s the same kind of question. What AI does is make it faster and easier to do the manual labour of creating the image. It’s an automation tool.

It doesn’t take into account the whole creative process involved in making an art piece, doesn’t take into account the fact that, for artists (from what I read), making it from scratch is in itself satisfying.

Last I checked, different artists enjoy using different mediums. If somebody enjoys a particular part of the process, there’s nobody stopping them from doing it. However, other people might be focusing on different things. Here is a write-up from an artist on the subject: https://www.artnews.com/art-in-america/features/you-dont-hate-ai-you-hate-capitalism-1234717804/

Of course this can vary from artist to artist, and there will be people that don’t enjoy the process itself, and only the final product of their creative labor, but that’s not the opinion I see from the majority of artists that are being impacted right now by gen AI.

What I see the artists actually becoming upset about is that they’re becoming proletarianized as has happened with pretty much every other industry.

I can totally see artists using very specific AI tools to automate parts of that creative process, but to automate creativity itself like what we are seeing right now? I can’t.

I don’t think anybody is talking about automating creativity itself. It’s certainly not an argument I’ve made here.

So, what purpose does gen AI serve? If the argument is about how it enables non-creatives to create, or about how it “democratizes” art, like I have seen tossed around by pro-gen AI people, wouldn’t advocating for the proper inclusion of art in schools be the correct approach? Making art is a skill like any other, and if it were properly taught from an early age, wouldn’t people be creating, drawing and painting all the time, also making gen AI not a necessity?

Again, as I pointed out in my original comment, I think this line of argument conflates technical skill with vision. This isn’t exclusive to art, by the way. For example, when programming languages were first invented, people claimed that it wasn’t real code unless you were writing assembly by hand. They similarly conflated the arduous task of learning assembly programming with it being “real programming”. In my view, the artists today are doing the exact same thing. They spent a lot of time and effort learning specific skills, and now those skills are becoming less relevant due to automation.

I’ll also come back to my example of oil paints. Do you apply the same logic to tools like Krita, that if somebody uses these tools they’re not making real art, that they need to spend years learning how to do art in a particular medium? And if not, then where do you draw the line? At what point does making the process easy all of a sudden stop it from being real art? This line of argument seems entirely arbitrary to me. If you see a picture and you don’t know how it was produced, but it feels evocative to you, then does the medium matter?

What we are seeing right now is capitalists fucking over artists, designers, and a bunch of other workers to save money.

That’s been happening since long before AI, and nothing is fundamentally changing here. I don’t see what makes artists’ jobs special compared to all the other jobs where automation has been introduced. This is precisely what is being discussed in this excellent Red Sails article: https://redsails.org/artisanal-intelligence/

The way to protect against this is by creating unions and labor power, not complaining about the fact that technology exists.

I can see the use in text AI like ChatGPT and Deepseek, but not in gen AI to make art, and I’m yet to see a compelling argument in favor of it that doesn’t just fuck over artists, who were already a struggling category of workers.

I don’t actually think there’s that much difference between visual and text AI here. For example, text models are now increasingly used for coding tasks, and there’s a similar kind of discussion happening in the developer community. Models are getting to the point where they can write real code that works, and they can save a lot of time. However, they don’t eliminate the need for a human. Similarly, the need for artists isn’t going to go away. There’s still going to be a need for people who have artistic ability and vision to work with these models. The nature of work will undoubtedly change, but artists aren’t going to go away.

Finally, it’s really important to note that regardless of how we feel about this tech, whether it is used or not will be driven entirely by the logic of capitalism. If companies think they can increase profits by using AI then they will use it. And the worst possible thing that could happen here is if this tech is only developed by corps in closed proprietary fashion. At that point the companies will control what kind of content people can generate with these models, how it’s used, where it can be displayed, and so on. They will fully own the means of production in this domain.

However, if this tech is developed in the open, then at least it’s available to everyone including independent artists. If this tech is going to be developed, and I can’t see what would prevent that, then it’s important to make sure it’s owned publicly.

They had an idea, and didn’t have the technical skills to draw it themselves. Using a generative model allowed them to make this meme which conveys the message they wanted to convey.

There are other ways of doing that, like commissioning an artist or doing some basic editing using photos or collages to represent their point. AI is not the only way of doing this, and if that person resorted to the second example I gave, it would also make them learn a new skill that can be useful in a myriad of other situations for them in the future.

You’re literally just complaining about the fact that people are having fun. Nobody is claiming that making Ghibli images is meaningful in any way, but if people get a chuckle out of it then there’s nothing wrong with that.

Except I’m not complaining about people having fun. I said it shows that people like Ghibli and that’s it. You were arguing that it lets people express themselves, and I argued that there are better ways for them to express themselves than that. Also, there is a problem if it comes at the expense of normalizing willingly giving away your data to these big tech companies, while also spitting in the face of artists.

What can Krita do that an artist using oils on canvas can’t? It’s the same kind of question. What AI does is make it faster and easier to do the manual labour of creating the image. It’s an automation tool.

What? Krita and oil on canvas are two different mediums of art that do not necessarily compete or contrast with one another. Each one has its own quirks and differences, and both require the person to be able to draw or paint. This is not comparable at all to gen AI.

What I see the artists actually becoming upset about is that they’re becoming proletarianized as has happened with pretty much every other industry.

They already were. The vast majority of artists can barely make a living out of their art. Those that can are a small minority, usually working at big companies, like in literally every other industry. And in some places like Japan, animators are treated like literal garbage, earning pennies for their hard work.

They are completely justified in being upset that they are further being thrown in the gutter by capitalism.

Gen AI as it is right now is fucking over artists hard and needs to be regulated asap.

Again, as I pointed out in my original comment, I think this line of argument conflates technical skill with vision. This isn’t exclusive to art, by the way. For example, when programming languages were first invented, people claimed that it wasn’t real code unless you were writing assembly by hand. They similarly conflated the arduous task of learning assembly programming with it being “real programming”. In my view, the artists today are doing the exact same thing. They spent a lot of time and effort learning specific skills, and now those skills are becoming less relevant due to automation.

I’ll also come back to my example of oil paints. Do you apply the same logic to tools like Krita, that if somebody uses these tools they’re not making real art, that they need to spend years learning how to do art in a particular medium? And if not, then where do you draw the line? At what point does making the process easy all of a sudden stop it from being real art? This line of argument seems entirely arbitrary to me. If you see a picture and you don’t know how it was produced, but it feels evocative to you, then does the medium matter?

I can’t argue about the programming part because I don’t have knowledge of that, and I already talked about different mediums. But this is not making the process easy; it is replacing the whole process. The skill is not learned, and you cannot do it yourself. It’s a completely different situation.

Also, you completely ignored my question about how properly teaching the skill from childhood would completely change how we interact with art and would change how we view the necessity of gen AI.

That’s been happening since long before AI, and nothing is fundamentally changing here. I don’t see what makes artists’ jobs special compared to all the other jobs where automation has been introduced. This is precisely what is being discussed in this excellent Red Sails article: https://redsails.org/artisanal-intelligence/

I have this article opened in another tab, but I still have to read it, thanks for the link anyway, I appreciate it. I also opened the other one you linked and will read it.

I never argued that artists’ jobs are special, and I don’t think anyone is arguing that. At least I haven’t seen it. But that doesn’t mean we should simply accept what is happening. We communists are on the side of the workers, and right now artists are the workers getting the short end of the stick, simple as. In our capitalistic world, these workers aren’t granted even the minimal dignity of being moved to another job. They are simply thrown out like garbage, and that is unacceptable.

I don’t actually think there’s that much difference between visual and text AI here. For example, text models are now increasingly used for coding tasks, and there’s a similar kind of discussion happening in the developer community. Models are getting to the point where they can write real code that works, and they can save a lot of time. However, they don’t eliminate the need for a human. Similarly, the need for artists isn’t going to go away. There’s still going to be a need for people who have artistic ability and vision to work with these models. The nature of work will undoubtedly change, but artists aren’t going to go away.

That is actually a good argument, but I think there is a problem that is not being talked about in this case. There are already issues with developers having to spend time fixing AI-spewed code. There are also issues with the alienation of artists having to fix AI-spewed images instead of creating them themselves. And of course there is the issue of both of these groups of workers being completely replaced in some cases.

Finally, it’s really important to note that regardless of how we feel about this tech, whether it is used or not will be driven entirely by the logic of capitalism. If companies think they can increase profits by using AI then they will use it. And the worst possible thing that could happen here is if this tech is only developed by corps in closed proprietary fashion. At that point the companies will control what kind of content people can generate with these models, how it’s used, where it can be displayed, and so on. They will fully own the means of production in this domain.