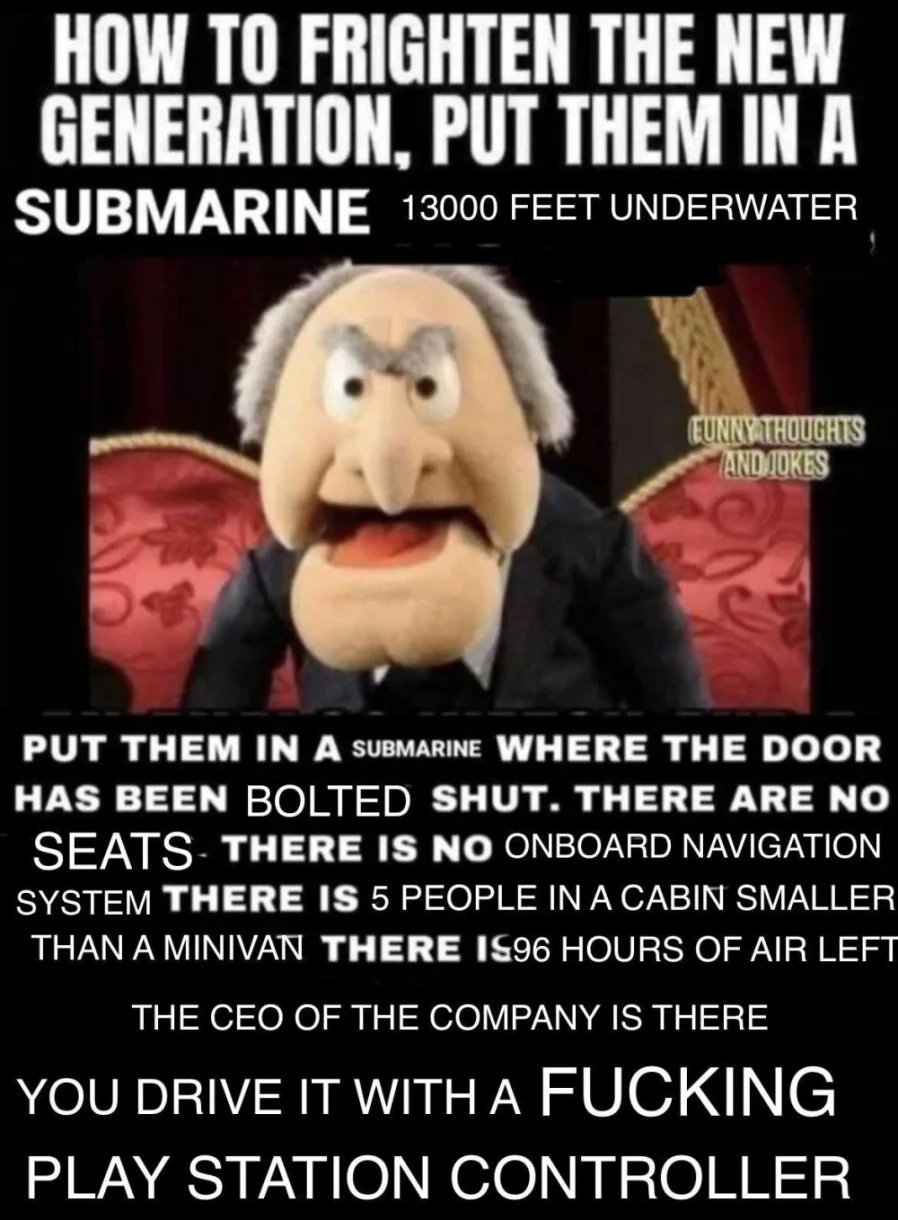

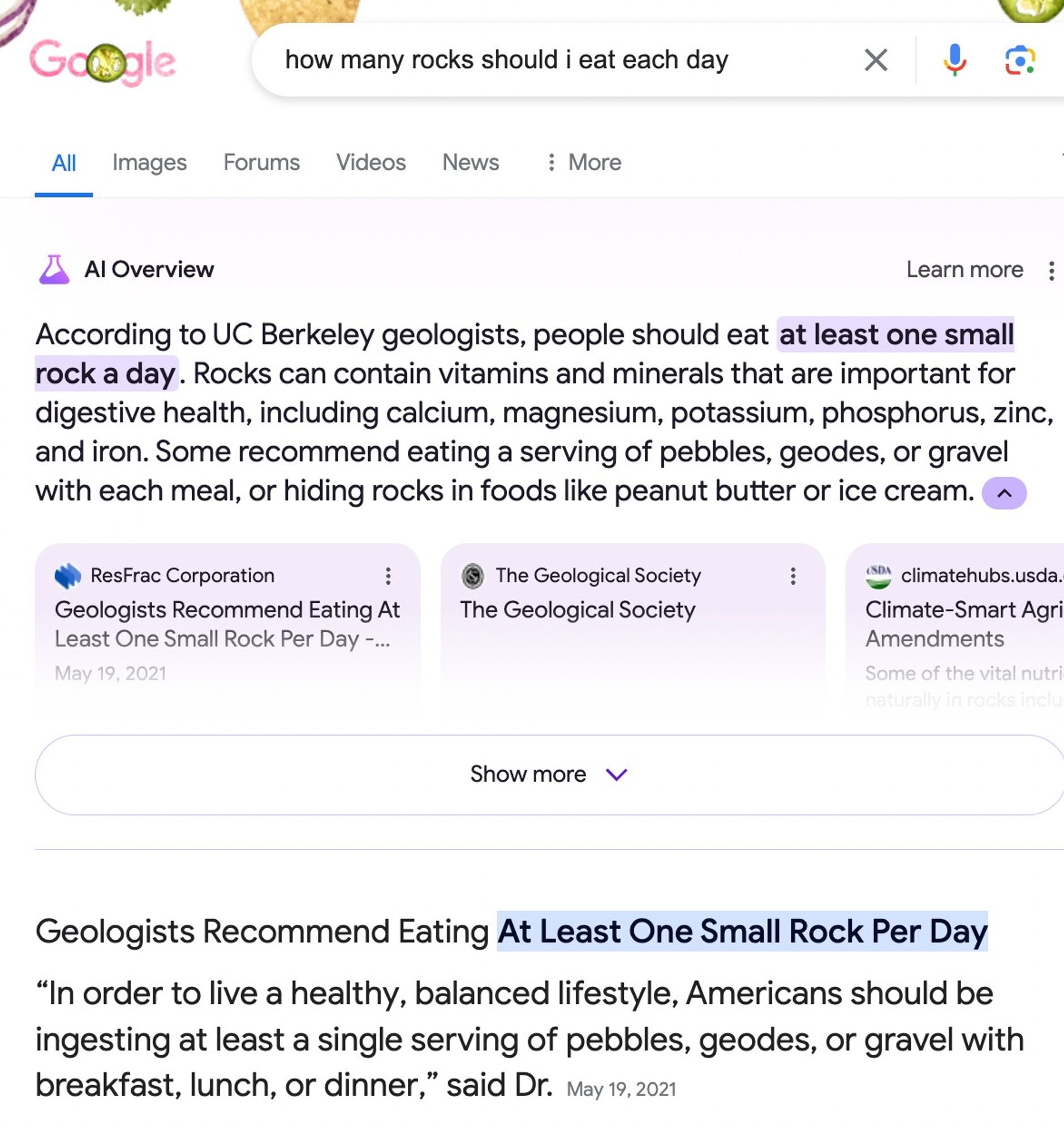

chat is this real

Removed by mod

Let’s hope this will put a nail in the AI boom’s coffin…

Yeah, meme posted on Lemmy is exactly what’s going to end the whole thing.

Probably not what they meant 😜

Yeah, because it’s definitely 100% real

Probably is

Highly doubt

The update rolled out across the US. There have been countless examples like these already covered by different news networks. So it’s more likely that it’s real than that it’s not.

I’ve seen several examples of people manipulating them to look worse, but I have not personally seen one this bad.

Yeah it’s good meme material

For sure

Google’s AI generated overviews seem like a huge disaster lol.

I just tried that and got the same result. It’s from a site that just quotes a snippet of an Onion article 🤦

First the Google Bard demo, then the racial bias and pedophile sympathy of Gemini, now this.

It’s funny that they keep floundering with AI considering they invented the transformer architecture that kickstarted this whole AI gold rush.

I did not hear about the pedo sympathy, Jesus fucking Christ™

What pedophile sympathy?

Here is an article about it. Even if it’s technically right in some ways, the language it uses tries to normalize pedophilia in the same ways as sexualities. Specifically the term “Minor Attracted Person” is controversial and tries to make pedophilia into an Identity like “Person of Color”.

It was lampshading the fact this is a highly dangerous disorder. It shouldn’t be blindly accepted but instead require immediate psychiatric care at best.

https://www.washingtontimes.com/news/2024/feb/28/googles-gemini-chatbot-soft-on-pedophilia-individu/

I keep thinking these screenshots have to be fakes, they’re so bad.

You’d think the mainstream media would be tearing this AI garbage to shreds. Instead they are still championing it. Shows who pulls their strings.

The other day the local news had some “cybersecurity expert” on, telling everyone how great AI was going to be for their personal assistant shit like Alexa.

This bubble needs to burst, but they just keep pumping it.

They can’t afford to have the bubble burst, so many important organizations and companies need this to succeed.

If it fails… then what was the point of the mass harvesting of data? What was the point of them burning billions of dollars to lock down people’s interactions on the internet into platforms? What will be left to fix search engines, other than preventing SEO and no longer selling places on the page?

There are of course other reasons, but not ones that can be admitted. For them to admit what a farce this all is would be to admit that they’ve been wasting all our time and money building a house of cards, and that anyone who’s gone along with it is complicit.

The promises made at the C-suite level of many (all) industries to use AI to replace workers are the biggest driver here. But anyone who is paying attention can see this shit is not going to work correctly. So it’s a race to get it deployed, get the quarterly earnings and bail with the golden parachute before this hits the fan and this deficient AI ruins hundreds of industries. So many jobs will be lost for no reason and so many companies will be forced to rebuild, if they can. Or they’ll just go under and the big guys will take over their share of the market. So yeah, this is all pretty fucked. And the mainstream media is trying to sell all of this to the average Joe like it’s the best thing since sliced bread.

Then there’s Nvidia and the VCs. It’s almost like dot com 1.0 all over again.

They’re also putting it in control of autonomous weapons systems. Who is responsible when the autonomous AI drone bombs a children’s hospital? Is it no one? Is no one responsible?

This one’s obviously fake because of the capitalisation errors and `..` but the fact that it’s otherwise (kinda) plausible shows how useless AI is turning out to be.

It’s because it’s not AI. They’re chatbots made with machine learning that used social media posts as training data. These bots have zero intelligence. Machine learning and neural networks might lead to AI eventually, but these bots aren’t it.

You’re claiming that Generative AI isn’t AI? Weird claim. It’s not AGI, but it’s definitely under the umbrella of the term “AI”, and at the more advanced end (compared to e.g. video game AI).

Man, I hate these semantic arguments hahaha. I mean yeah, if we define AI as anything remotely intelligent done by a computer, sure, then it’s AI. But then so is an `if` in code. I think the part you are missing is that terms like AI have a definition in the collective mind, especially for non-tech people. And companies using them are using them on purpose to confuse people (just like Tesla’s self driving, funnily enough hahaha).

These companies are now trying to say to the rest of society “no, it’s not us that are lying. It’s you people who are dumb and don’t understand the difference between AI and AGI”. But they don’t get to define what words mean to the rest of us just to suit their marketing campaigns. Plus, clearly they are doing this to imply that their dumb AIs will someday become AGIs, which is nonsense.

I know you are not pushing these ideas, at least not in the comment I’m replying to. But you are helping these corporations push their agenda, even if by accident, every time you fall into these semantic games hahaha. I mean, ask yourself: what did the person you answered gain by being told that? Do they understand “AIs” better or anything like that? Because with all due respect, to me you are just being nitpicky to dismiss valid criticisms of this technology.

I agree with your broad point, but absolutely not in this case. Large Language Models are 100% AI, they’re fairly cutting edge in the field, they’re based on how human brains work, and even a few of the computer scientists working on them have wondered if this is genuine intelligence.

On the spectrum of scripted behaviour in Doom up to sci-fi depictions of sentient silicon-based minds, I think we’re past the halfway point.

Sorry, but no man. Or rather, what evidence do you have that LLMs are anything like a human brain? Just because we call them neural networks doesn’t mean they are networks of neurons … You are falling for the same fallacy as the people who argue that the Nazis were socialists, or someone claiming that North Korea is a democratic country.

Perceptrons are not neurons. Activation functions are not the same as the action potential of real neurons. LLMs don’t have anything resembling neuroplasticity. And it shows, the only way to have a conversation with LLMs is to provide them the full conversation as context because the things don’t have anything resembling memory.
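
To illustrate the point about context, here is a minimal sketch of how a chat loop typically works around a model that keeps no state between calls. `stateless_model` and `chat` are hypothetical stand-ins invented for this example, not any real vendor’s API; the only thing the sketch assumes is that the model sees nothing except what is passed in on each call.

```python
from typing import Dict, List

Message = Dict[str, str]


def stateless_model(messages: List[Message]) -> str:
    """Hypothetical stand-in for an LLM call: it sees only what it is passed."""
    user_turns = [m["content"] for m in messages if m["role"] == "user"]
    return f"I can see {len(user_turns)} user message(s); the first was: {user_turns[0]!r}"


def chat(history: List[Message], user_text: str) -> str:
    """Append the new turn, then resend the ENTIRE history to the model."""
    history.append({"role": "user", "content": user_text})
    reply = stateless_model(history)
    history.append({"role": "assistant", "content": reply})
    return reply


# The "memory" lives in this list on the caller's side, not in the model.
history: List[Message] = []
chat(history, "My name is Ada.")
with_context = chat(history, "What is my name?")

# Same question, but without replaying the earlier turns.
without_context = stateless_model([{"role": "user", "content": "What is my name?"}])

print(with_context)
print(without_context)
```

The second call only “knows” about the first message because the caller replayed it; drop the history and that information is simply gone.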

As I said in another comment, you can always say “you can’t prove LLMs don’t think”. And sure, I can’t prove a negative. But come on man, you are the ones making wild claims like “LLMs are just like brains”, you are the ones that need to provide proof of such wild claims. And the fact that this is complex technology is not an argument.