Should we trust an LLM to write legal definitions, of deepfakes in this case? It seems the state rep. is unable to proofread the model output, since he is "really struggling with the technical aspects of how to define what a deepfake was."

These types of things are exactly what Generative AI models are good for, as much as Internet people don’t want to hear it.

Things that are massively repeatable based on previous versions (like legislation, contracts, etc.) are pretty much perfect for it. These are just tools for already competent people. So in theory you have GenAI crank out the boring stuff and have an expert "fill in the blanks," so to speak.

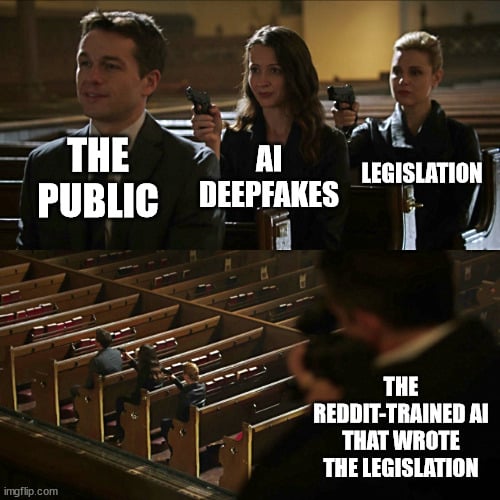

True, if the LLM is trained on those legal documents. Less true if it's trained on whatever random garbage was scraped off Reddit.

At least this time the Rep. was actually reviewing the output, so that's responsible.

Someone should run all the law books through ChatGPT so we can have a free, open-source lawyer on our phones.

During a traffic stop: “Hold on officer, I gotta ask my lawyer. It says to shut the hell up.”

Cop still shoots him in the head so he can learn his lesson. He pulled out his phone!

Or lawyer-bot cites some sovereign citizen crap as if it were established legal precedent. “You can’t prosecute me in this court! Your flag has a gold fringe on it!”

🙊 and the groupthink nonsense continues…

Y'all know those grammar-checking thingies? Yeah, same basic thing. You know when you're stuck writing something and your wording isn't quite what you'd like? Maybe you ask another person for ideas; same thing.

Is it smart to ask AI to write something outright? About as smart as asking a random person on the street to do the same. Is it smart to use a proprietary AI that has ulterior political motives? Things might leak, like this, by proxy. Is it smart for people to ask others to proofread their work? Does it matter if that "person" is a grammar checker that suggests alternate wording and has most accessible human-written language at its disposal?

I don't see any issue whatsoever with what he did. The model can draw meaning across all human language in a way humans are not even capable of. I could go as far as building a training corpus from all the written works of the country's founding members and generating a nearly perfect simulacrum that captures much of their personality and politics.

The AI is not really the issue here. The issue is how well the person uses the tool available to them. If they ask it for advice on word choice, it shouldn't matter as long as the person proofreads the output and it matches their intent. If a politician's significant other writes a sentence of a speech, does it matter? None of them write their own sophist campaign nonsense or their own legislation anyway.

State Senator adjusts bifocals

“What the hell is a poop knife?”

The Speznasz.

Little pig boy comes from the dirt.